The Feldera Blog

An unbounded stream of technical articles from the Feldera team

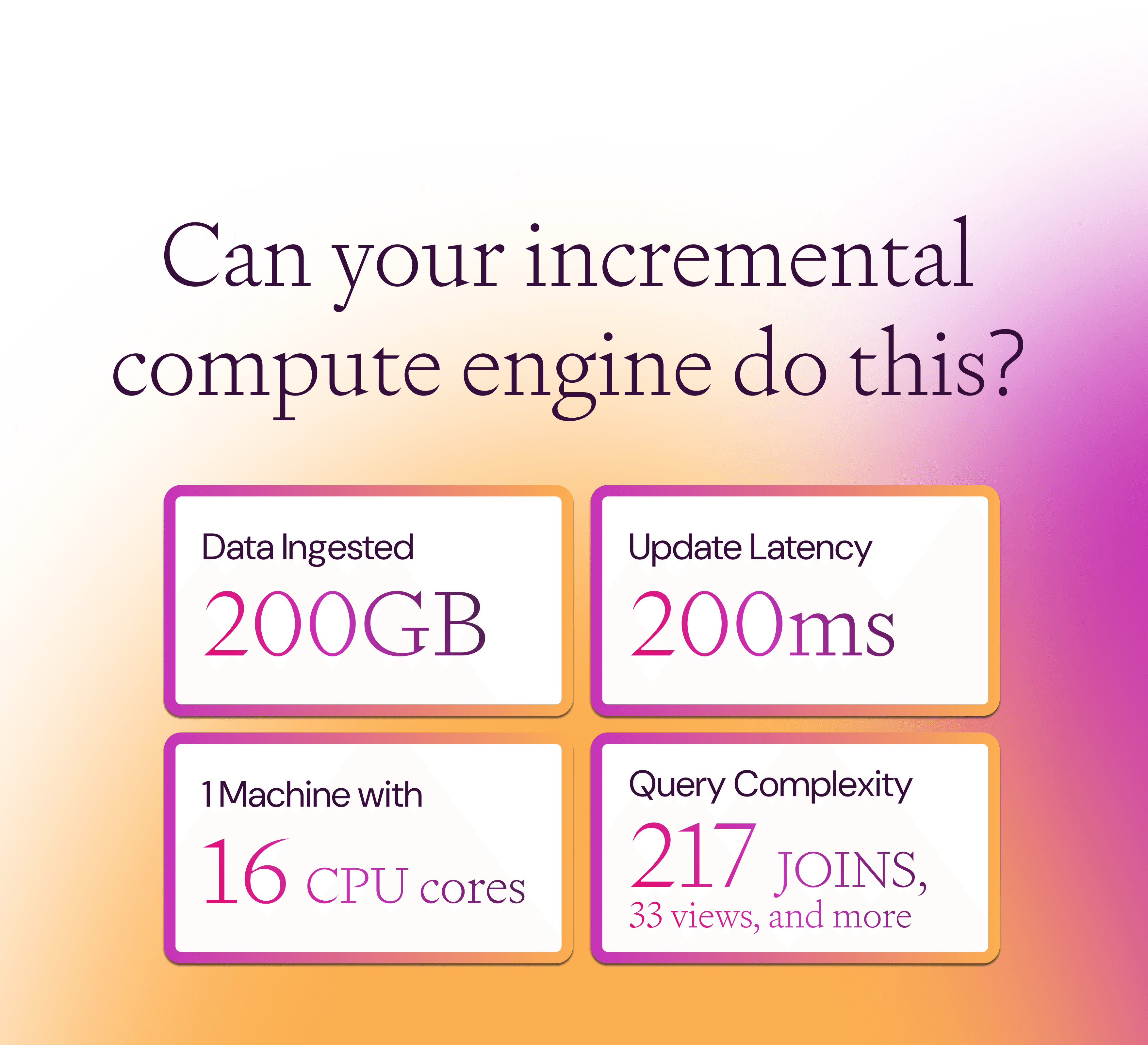

Can your incremental compute engine do this?

Handle 217 joins, 27 aggregations, and 287 linear operators on a single 16-core machine using 15 GB of RAM at steady state. Here's the proof.

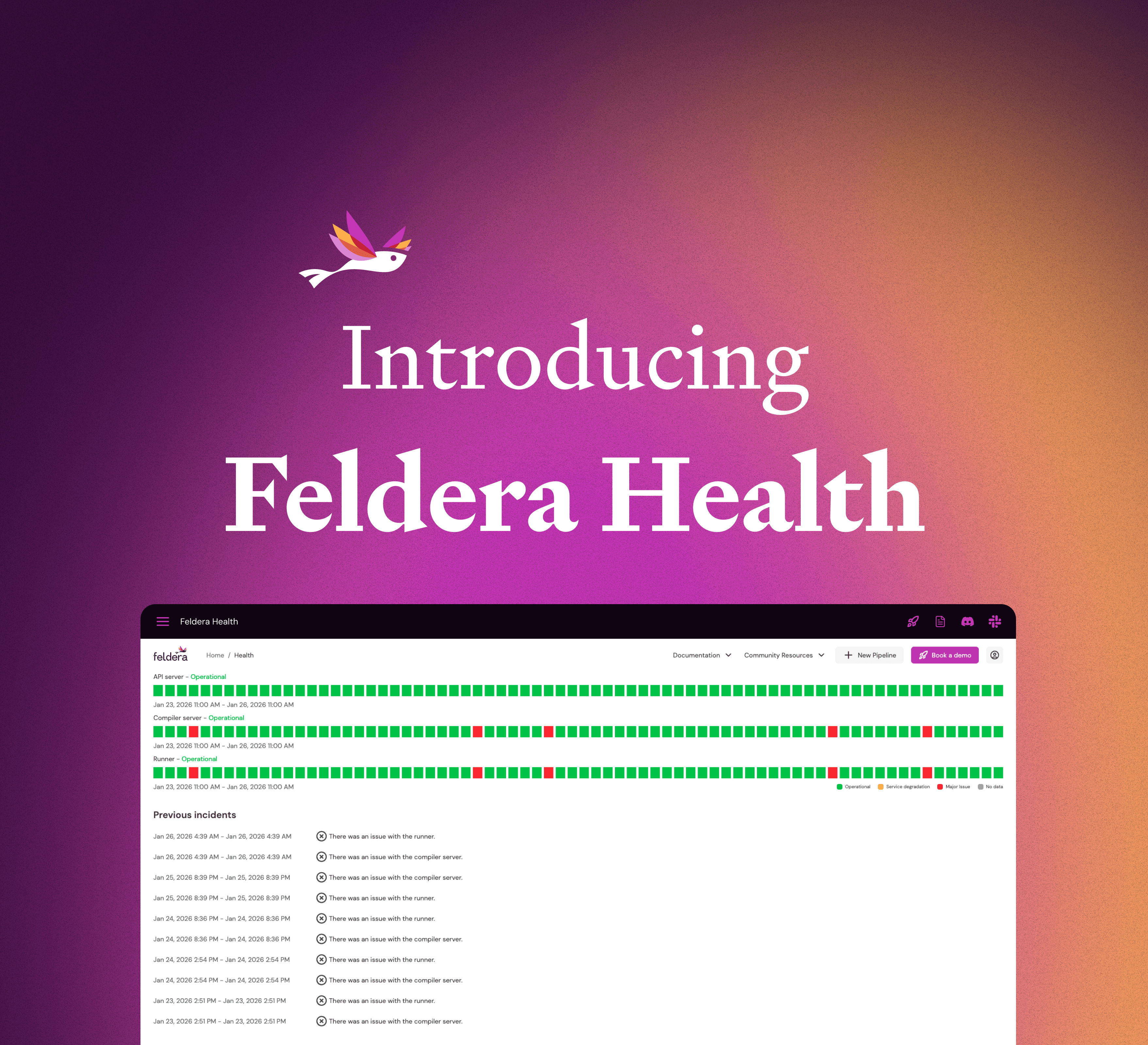

Introducing Feldera Health

Introducing Feldera Health: a lightweight dashboard that shows the status of your infrastructure without requiring Kubernetes access. Get quick answers when pipelines fail.

Feldera in 2025: Building the Future of Incremental Compute

Feldera broke a 50-year barrier with incremental computation: a better way to create sophisticated analytics at the speed your data actually changes, in real time.

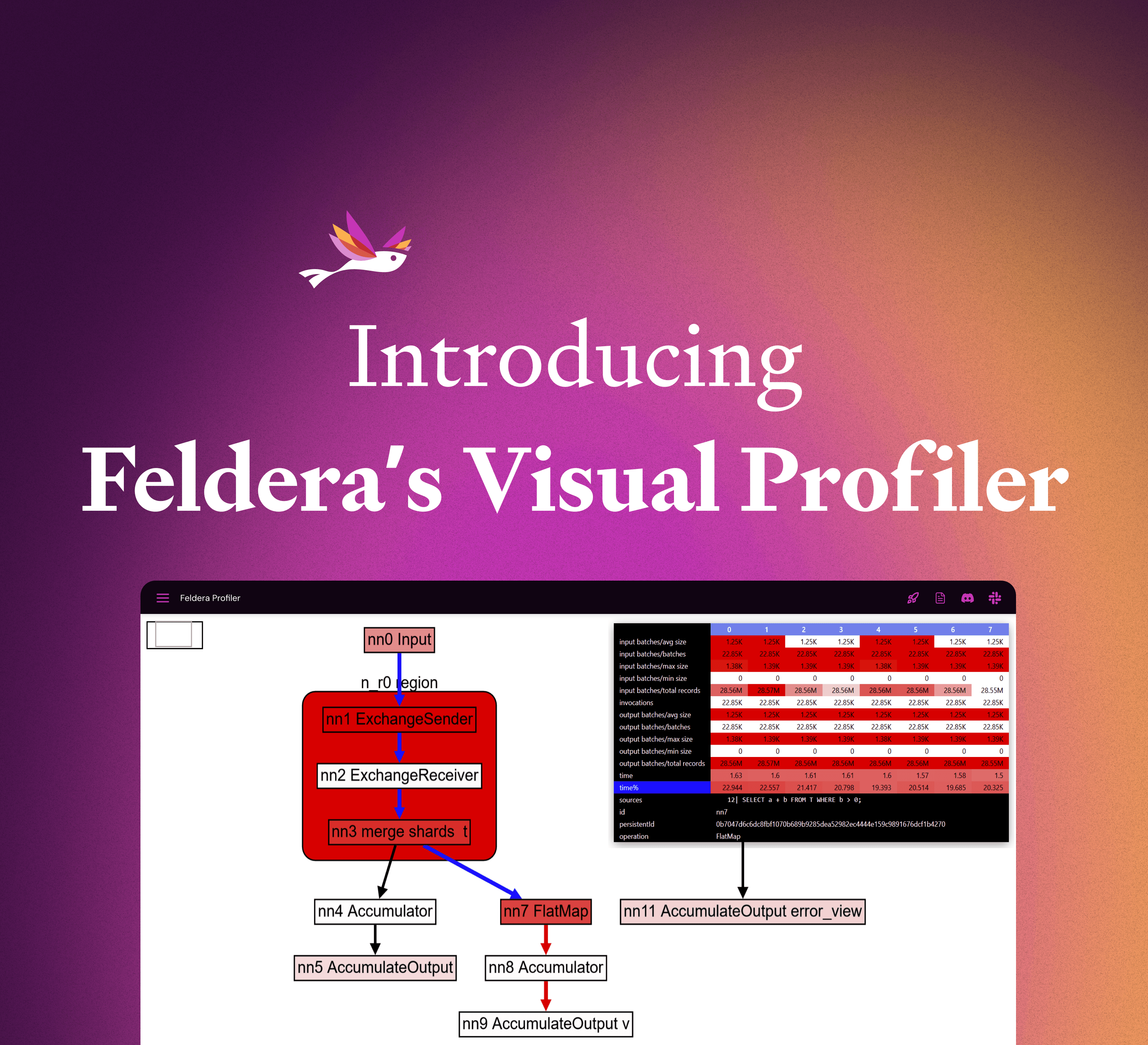

Introducing Feldera's Visual Profiler

We built a browser-based visualizer to dig into a Feldera pipeline's performance metrics. This tool can help users troubleshoot performance problems and diagnose bottlenecks.

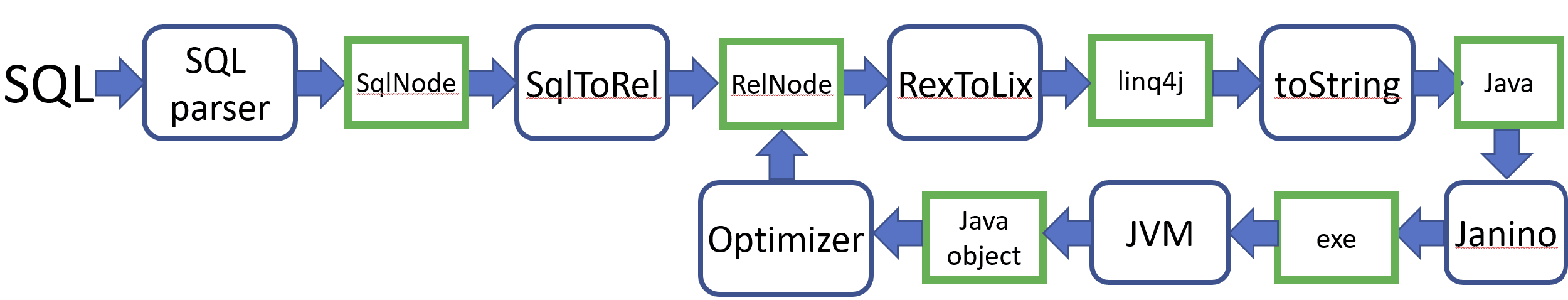

Constant folding in Calcite

In this article we describe in detail how the Calcite SQL compiler framework optimizes constant expressions during compilation.

The Dirty Secret of Incremental View Maintenance: You Still Need Batch

Using a simple example, we show why even a powerful IVM system like Feldera requires special care to make backfill efficient.

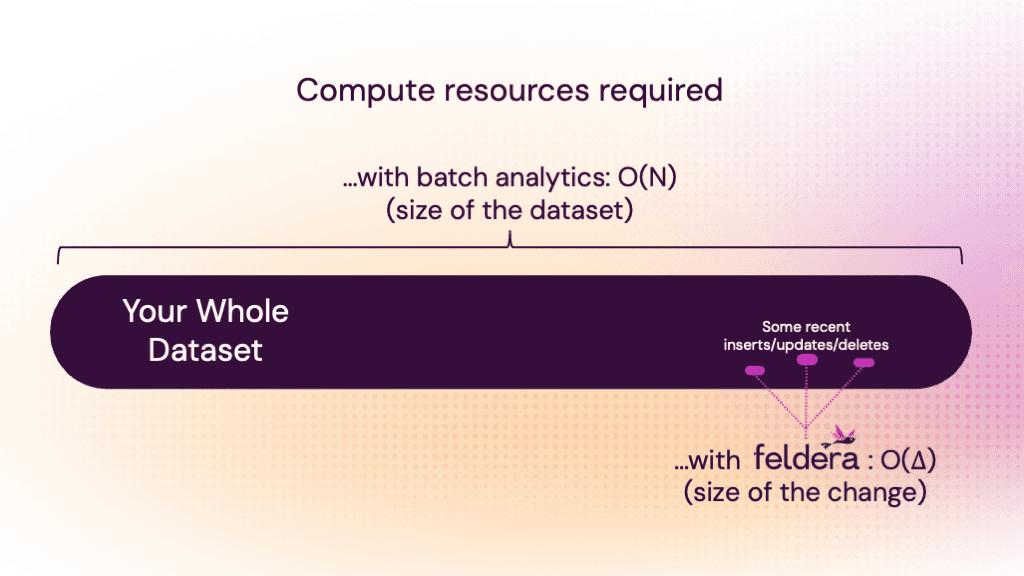

How Feldera Customers Slash Cloud Spend (10x and beyond)

By only needing compute resources proportional to the size of the change, instead of the size of the whole dataset, businesses can dramatically slash compute spend for their analytics.

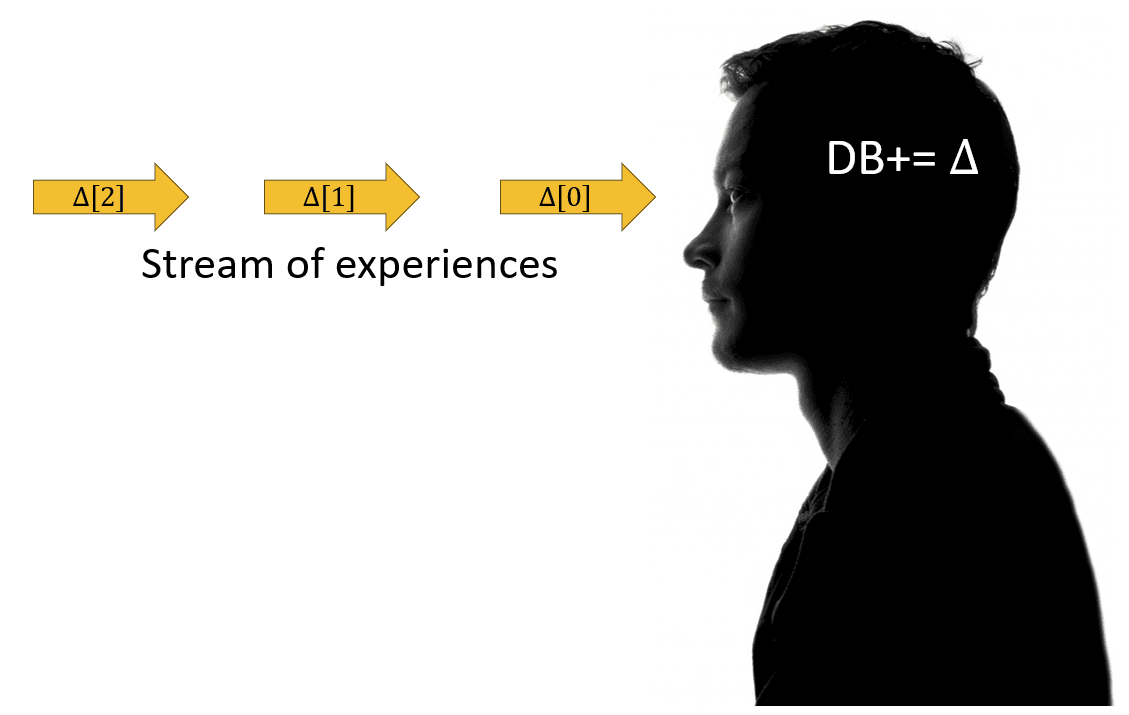

Stream Integration

In this blog post we informally introduce one core streaming operation: integration. We show that integration is a simple, useful, and fundamental stream processing primitive, which is used not only in computing systems like Feldera, but also by organisms to interact with their environment.
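
As a rough illustration of the idea (not Feldera's implementation), integrating a stream means emitting, at each step, the running sum of every element seen so far. The sketch below assumes plain numbers and, for a database flavor, dictionaries mapping rows to integer weights as a stand-in for change sets; the function names `integrate` and `integrate_changes` are hypothetical.

```python
from itertools import accumulate


def integrate(stream):
    """Running sum: output at step t is the sum of inputs 0..t."""
    return list(accumulate(stream))


def integrate_changes(deltas):
    """Integrate a stream of changes (row -> weight) into a stream of snapshots.

    Positive weights insert rows, negative weights delete them; rows whose
    accumulated weight reaches zero disappear from the snapshot.
    """
    snapshot = {}
    out = []
    for delta in deltas:
        for row, weight in delta.items():
            new_weight = snapshot.get(row, 0) + weight
            if new_weight == 0:
                snapshot.pop(row, None)
            else:
                snapshot[row] = new_weight
        out.append(dict(snapshot))  # copy so later steps don't mutate it
    return out


print(integrate([3, -1, 4]))
print(integrate_changes([{"a": 1}, {"b": 1}, {"a": -1}]))
```

Here `integrate([3, -1, 4])` yields `[3, 2, 6]`, and the change stream inserts `a`, inserts `b`, then deletes `a`, producing the snapshots `{"a": 1}`, `{"a": 1, "b": 1}`, and `{"b": 1}`.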

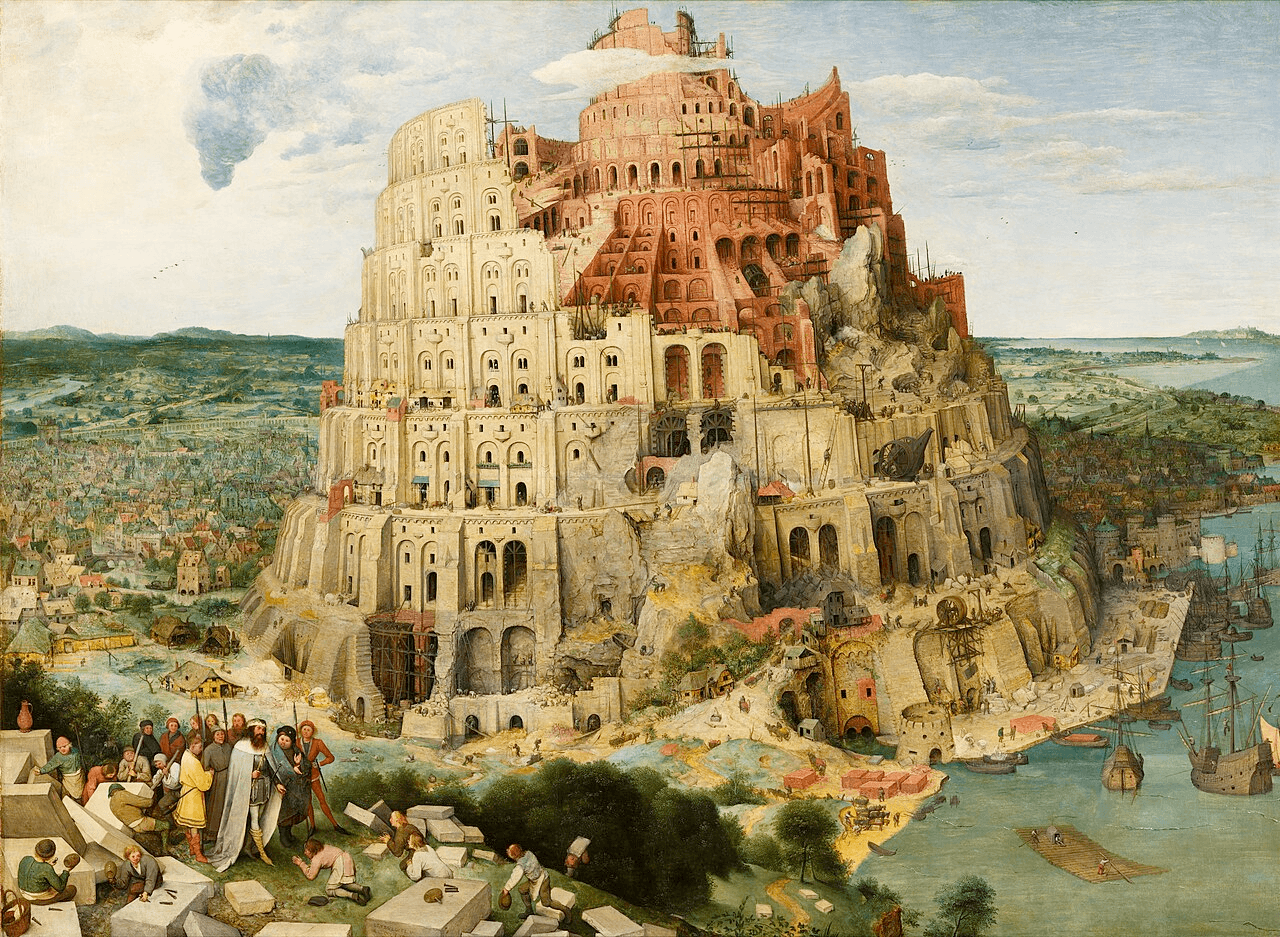

The Babel tower of SQL dialects

You would think that almost 40 years would be enough for all SQL vendors and implementers to converge on a well-defined syntax and semantics. In reality, the opposite has happened.