Feldera's product is an incremental computation engine.

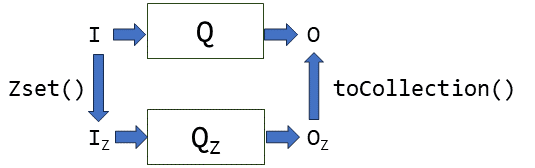

Users give the engine a SQL program that declares input tables and output views. The Feldera compiler converts this program into a fully incremental pipeline. The inputs to the pipeline are changes to the input tables; for each set of input changes, the pipeline computes the corresponding changes to all the views.

The main value proposition of Feldera is that it does very little work to compute the output changes: the work performed is proportional to the size of the changes, not the size of the tables. This is why we call Feldera "incremental": the "increments" in question are the changes to the tables and to the views.
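To make "work proportional to the size of the changes" concrete, here is a minimal Python sketch of one incrementally maintained view, the equivalent of `SELECT key, COUNT(*) ... GROUP BY key`. It is an illustration only, not Feldera's implementation or API: the class `IncrementalCountView`, its `apply` method, and the convention of encoding an insert as weight `+1` and a delete as weight `-1` are all assumptions made for this example.

```python
from collections import defaultdict


class IncrementalCountView:
    """Hypothetical sketch: maintains a per-key row count under inserts and deletes."""

    def __init__(self):
        # Current contents of the view: key -> count (only non-zero counts are stored).
        self.counts = {}

    def apply(self, changes):
        """Apply a batch of input changes and return the resulting view changes.

        changes: iterable of (key, weight), where weight +1 is an insert
                 and weight -1 is a delete (an assumed encoding).
        returns: list of ((key, count), weight) describing rows removed from
                 (weight -1) and added to (weight +1) the view.
        """
        # Collapse the batch to one net change per key.
        net = defaultdict(int)
        for key, weight in changes:
            net[key] += weight

        output_changes = []
        # Only keys that appear in the batch are visited; the rest of the
        # view is never scanned.
        for key, delta in net.items():
            if delta == 0:
                continue
            old = self.counts.get(key, 0)
            new = old + delta
            if old != 0:
                output_changes.append(((key, old), -1))  # retract the old row
            if new != 0:
                output_changes.append(((key, new), +1))  # emit the new row
                self.counts[key] = new
            else:
                self.counts.pop(key, None)
        return output_changes


view = IncrementalCountView()
first = view.apply([("a", +1), ("a", +1), ("b", +1)])
second = view.apply([("a", -1)])
```

In this sketch the second call touches only the key `"a"`, no matter how many other keys the view already holds, which is the property the paragraph above describes.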

The problem with traditional systems

When given complex queries, traditional databases and other streaming systems either fall back on full recomputation, which is very expensive, or give up.

When describing our technology to other people, I have sometimes heard comments such as: "Oh, we solved this problem 15 years ago, and we sold a startup doing exactly this many years ago."

I am skeptical of such claims.

Two products can claim to solve the same problem, but one may work flawlessly, while the other crashes and burns.

A real-world test

To illustrate the capabilities of the Feldera engine, I took a complex, real-world SQL program from one of our customers and generated its query plan. The plan is shown in the picture above: there are 61 input tables and 33 output views. The plan contains 217 join operators (many of them left joins), 27 aggregation operators, and 287 simpler "linear" operators (in SQL, linear operators include SELECT and WHERE).
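For readers wondering what a single incremental join operator has to do, here is a small Python sketch of an inner join maintained from changes alone. It is a textbook-style illustration under stated assumptions, not the operator Feldera generates: the class `IncrementalJoin`, its `step` method, and the `(key, value, weight)` change encoding are hypothetical. It relies on the identity that the change to a join equals (left changes joined with the old right side) plus (the updated left side joined with right changes).

```python
from collections import defaultdict


def _update(index, key, value, weight):
    """Add `weight` to the stored weight of (key, value), dropping zero entries."""
    bucket = index.setdefault(key, {})
    new_weight = bucket.get(value, 0) + weight
    if new_weight != 0:
        bucket[value] = new_weight
    else:
        bucket.pop(value, None)
        if not bucket:
            del index[key]


class IncrementalJoin:
    """Hypothetical sketch: inner join on `key`, maintained from input changes."""

    def __init__(self):
        # Both inputs are kept indexed by join key: key -> {value: weight}.
        self.left = {}
        self.right = {}

    def step(self, d_left, d_right):
        """d_left, d_right: lists of (key, value, weight), weight +1 / -1.

        Returns the change to the join output as {(key, lval, rval): weight}.
        """
        out = defaultdict(int)

        # Left changes joined with the OLD right side.
        for key, lval, w in d_left:
            for rval, rw in self.right.get(key, {}).items():
                out[(key, lval, rval)] += w * rw

        # Fold the left changes into the left index.
        for key, lval, w in d_left:
            _update(self.left, key, lval, w)

        # UPDATED left side joined with right changes.
        for key, rval, w in d_right:
            for lval, lw in self.left.get(key, {}).items():
                out[(key, lval, rval)] += lw * w

        # Fold the right changes into the right index.
        for key, rval, w in d_right:
            _update(self.right, key, rval, w)

        return {row: w for row, w in out.items() if w != 0}


join = IncrementalJoin()
step1 = join.step([(1, "alice", +1)], [(1, "order-1", +1)])
step2 = join.step([], [(1, "order-2", +1)])
step3 = join.step([(1, "alice", -1)], [])
```

Each step looks up only the keys present in the incoming changes, so its cost depends on the number of changes and their matches rather than on the total size of either input. A plan with hundreds of such operators, plus left joins and aggregations feeding one another, is considerably harder to get right than this single-operator sketch suggests.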

The results

The generated pipeline runs great: after ingesting roughly 200GB of data (250 million rows) from Delta Lake, the pipeline incrementally updates all outputs within about 200 milliseconds of receiving one or more input changes. It runs on a single machine with 16 CPU cores and uses less than 30GB of RAM at peak and 15GB at steady state.

So, can your incremental engine do this?

See how we handle 217 joins: book a demo.