Incremental View Maintenance (IVM) sounds like the holy grail: update your complex analytics instantly as data streams in. The problem? It breaks down the moment you leave the demo stage: every real system needs to backfill massive amounts of historical data before handling real-time updates, and most IVM engines choke when asked to do it. That’s why a production-ready IVM engine has to be a batch engine first.

This challenge, known as the backfill problem, is one of the biggest hurdles in building a production-ready IVM engine. Over the past few months, we’ve been working on a solution, and we’re about to announce it.

In this article, I’ll explain what exactly makes backfill tricky. Using a simple example, I’ll show why even a powerful IVM system like Feldera requires special care to make backfill efficient.

Example

Consider a SQL table that records the grades students receive in different classes. We define a view that augments each record with the average grade across all students in the same class:

    create table grades (
        student string,
        class string,
        grade decimal(5,2)
    );

    create view enriched_grades as
    select
        student,
        class,
        grade,
        AVG(grade) over (partition by class) AS class_avg
    from grades;

We’d like to use IVM to keep this view up to date as teachers enter new grades in real time. But before we can do that, we first need to compute the current snapshot of the table, which may already contain billions of records (it’s a big school!). How do we go about this?

Here's one idea. We have an IVM engine that can handle changes efficiently. Why not break up the input dataset into many small chunks and ingest them one by one? Unfortunately, this strategy backfires. Consider the state of the system shown below:

Let's add two new records:

    insert into grades values
        ('Carl', 'Algebra', 85),
        ('Carl', 'Physics', 97);

Each new grade changes the class average, which forces the system to update every record in that class. Old outputs are deleted and replaced with new ones:
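To make the delete-and-replace effect concrete, here is a minimal Python sketch. It is not how Feldera computes changes: it simply recomputes the view before and after the insert and diffs the two results. The starting rows for Alice and Bob are hypothetical, chosen only for illustration, and the helper names `enriched_grades` and `output_delta` are made up for this sketch.

```python
from collections import Counter
from fractions import Fraction

def enriched_grades(grades):
    """Recompute the view from scratch: every row plus its class average."""
    by_class = {}
    for _student, cls, grade in grades:
        by_class.setdefault(cls, []).append(grade)
    avg = {cls: Fraction(sum(gs), len(gs)) for cls, gs in by_class.items()}
    # A Counter models the view as a multiset of output rows.
    return Counter((s, c, g, avg[c]) for s, c, g in grades)

def output_delta(before, after):
    """Return (deleted rows, inserted rows) between two snapshots of the view."""
    old, new = enriched_grades(before), enriched_grades(after)
    return old - new, new - old

# Hypothetical starting state (an assumption, not data from the article).
initial = [
    ("Alice", "Algebra", 90), ("Bob", "Algebra", 70),
    ("Alice", "Physics", 95), ("Bob", "Physics", 85),
]
new_rows = [("Carl", "Algebra", 85), ("Carl", "Physics", 97)]

deletes, inserts = output_delta(initial, initial + new_rows)
num_deletes = sum(deletes.values())
num_inserts = sum(inserts.values())
print(f"{len(new_rows)} input rows -> {num_deletes} deletes + {num_inserts} inserts")
```

With this starting state, two new input rows cause ten output changes: all four existing rows are retracted because their `class_avg` changed, and six rows are inserted with the new averages.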

Now let’s add two more:

    insert into grades values
        ('David', 'Algebra', 96),
        ('David', 'Physics', 91);

Once again, both Algebra and Physics averages must be recomputed, triggering another full update of those classes:

What if we processed all four inputs at once? In that case, the engine would skip the intermediate updates and jump directly to the final result:

In both cases, the engine does work proportional to the number of changes, counting changes to both the input table and the output view. But when ingesting in smaller chunks, much of that work is wasted, because intermediate updates are overwritten by later ones.
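The difference can be counted with a small Python sketch. This is an illustrative model, not Feldera's implementation: it tracks a running sum and row count per class and charges one output change for every row deleted from or inserted into the view. The initial rows for Alice and Bob are hypothetical, and `ingest` is a name invented for this sketch.

```python
from fractions import Fraction

def ingest(initial, chunks):
    """Total output-view changes (deletes + inserts) when `chunks`
    are ingested one after another on top of `initial`."""
    total, count = {}, {}  # per-class running sum and row count

    def apply(rows):
        touched = {}
        for _student, cls, grade in rows:
            touched.setdefault(cls, []).append(grade)
        changes = 0
        for cls, grades in touched.items():
            old_n = count.get(cls, 0)
            old_avg = Fraction(total[cls], old_n) if old_n else None
            total[cls] = total.get(cls, 0) + sum(grades)
            count[cls] = old_n + len(grades)
            if Fraction(total[cls], count[cls]) != old_avg:
                # Average changed: retract every old row, emit every row anew.
                changes += old_n + count[cls]
            else:
                # Average unchanged: only the new rows are emitted.
                changes += len(grades)
        return changes

    apply(initial)  # load the starting state; its cost is not counted
    return sum(apply(chunk) for chunk in chunks)

# Hypothetical starting state (an assumption, not data from the article).
initial = [("Alice", "Algebra", 90), ("Bob", "Algebra", 70),
           ("Alice", "Physics", 95), ("Bob", "Physics", 85)]
carl = [("Carl", "Algebra", 85), ("Carl", "Physics", 97)]
david = [("David", "Algebra", 96), ("David", "Physics", 91)]

chunked = ingest(initial, [carl, david])   # two small deltas
batched = ingest(initial, [carl + david])  # one larger delta
print(chunked, batched)
```

Under these assumptions the two-step ingest produces 24 output changes and the single-step ingest produces 12, even though both end in the same final view.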

Takeaways

This example shows that the ideal ingest granularity depends on the workload phase.

- Backfill. The most efficient way to process historical data is as one giant delta. This avoids redundant updates and minimizes the time to complete backfill.

- Real-time updates. For streaming inputs, low latency is critical, so the engine must process small changes immediately as they arrive.
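A back-of-the-envelope count shows how quickly the redundant work grows. The model is a simplification introduced here, not taken from a measurement: a single class ends up with $n$ records, they arrive in $m = n/k$ chunks of $k$ records each, and every chunk changes the class average. Chunk $i$ then deletes the $(i-1)k$ rows already in the view and inserts $ik$ rows, so the total number of output changes is

$$\sum_{i=1}^{m} \bigl((i-1)k + ik\bigr) = k \sum_{i=1}^{m} (2i - 1) = k m^2 = \frac{n^2}{k}.$$

A single delta ($k = n$) produces $n$ output changes, while row-at-a-time ingestion ($k = 1$) produces $n^2$. In this model, halving the chunk size doubles the output work for the same final result.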

The problem is that IVM and streaming engines are typically optimized for small deltas that can be processed in memory. They are likely to fail or run out of memory when handed a billion-record delta during backfill.

The Feldera solution

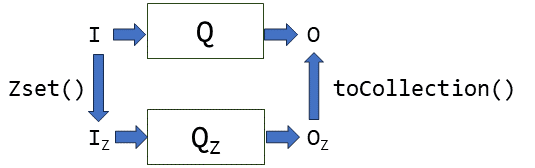

Over the past few months, we’ve taught Feldera to process very large deltas efficiently. Importantly, this required no changes to the underlying theory, algorithms, or data structures — all the mathematical guarantees of DBSP hold for both small and large deltas. Instead, we focused on the runtime: introducing new operators and optimizing existing ones to handle changes larger than memory.

That story, however, deserves a blog post of its own.