A common pattern I notice when I talk to customers today is that several of them have implemented incremental computation by hand, even though they don't realize it. They often compose complicated pipelines to handle batch and streaming data flowing in from different sources, using a combination of message queues, lakehouses, ETL tools, CDC systems, and both transactional and analytical databases. All of this work is done (consciously or not) in service of one goal: continuously update analytics to reflect newly arriving data, without re-running queries over all the data from scratch. In short, incremental computation.

Every time I see such an architecture, I am in fact reminded of an important period in computing history, the 70s. Specifically, a seminal paper in our field, Edgar F. Codd's work on relational algebra.

The 70s: Codd's relational algebra paper

Relational algebra gave us a mathematical notion of modeling data and queries on them with well-defined semantics. The semantics are key, because it gives us a recipe to describe how queries transform inputs into outputs, and also tells us how we can transform queries into equivalent but more efficient ones. It is what allows us to separate what a SQL query is supposed to achieve (intent), from how it is achieved (implementation). This mathematical underpinning as you know, changed the database world for the best.

To truly appreciate Codd's work however, you have to go back to what life was like without relational algebra.

Before Codd's paper took off, databases lacked what was called "data independence". An application that wanted to access data from a database had to be intimately aware of how the data is stored and represented (e.g., pointers, arrays, lists, specific on-disk formats), and it was impossible to evolve the data representation without breaking applications. This was ingrained so deeply in the database world that they couldn't believe Codd's idea could possibly work: look at these two excerpts from the famous rejection the paper got:

In this situation, any realistic model might end up requiring dozens of interconnected tables—hardly a practical solution given that, probably, we can represent the same model using two or three properly formatted files.

The main reason for using specialized file formats is efficiency: Data can be laid out in such a way that the common access patterns are efficient. This paper proposes a model in which, to extract any significant answer from any real database, the user will end up with the very inefficient solution of doing a large number of joins

Several years later, IBM would put out a few more seminal papers building on Codd's work, including System R, the implementation that took relational algebra from theory to practice. The rest as you know, is history.

2024: Déjà vu, we've been in this place before

I see the exact same pattern as above repeating itself today, but with incremental view maintenance (IVM).

I see engineers are forced to manually implement incremental computation throughout their IT infrastructure -- where instead of just writing queries that define a pipeline, we now have multiple systems that have to be stitched together, developed and operated independently to achieve a similar outcome. In effect, taking 6-12 months to build a solution that is hard to evolve, compared to a SQL program that could be developed and tested in a few hours. One where there is a purpose built complex distributed system that doesn't support changing the shape of the data or the queries easily. In short, custom built solutions without "data independence".

The other alternative is to use one of several systems built under the guise of streaming systems, that offer restricted dialects of SQL without well-defined semantics, again, forced by a lack of a proper mathematical foundation.

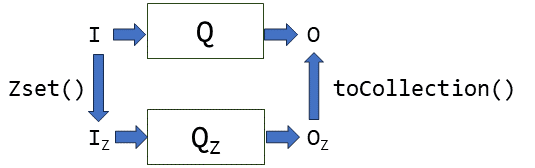

At Feldera, we seek to solve this problem once and for all, from first principles. Like relational algebra, DBSP is a streaming algebra. It is our award-winning work that gives us a recipe to incrementally evaluate any batch SQL query. Like System R was for relational algebra, Feldera is the first implementation of DBSP, and a pretty fast one at that! Without such a mathematical foundation, it is impossible to truly democratize incremental computation.

With Feldera, you can now finally separate what a query operating on streaming and batch sources is supposed to achieve (intent), from how the engine achieves its goal (implementation). It's what allows Feldera to uniformly support inserts, updates and deletes in real-time on arbitrarily complex SQL programs, operating on any number of data sources and destinations at once. It's also what allows Feldera to efficiently support scale-out, storage and fault-tolerance.

And as to whether the rest will be history? We don't know yet, but we do hope you'll join us to discover that together. Reach out to us below!